Overview

The primary goal of localization is to use noisy inputs from multiple sensors to estimate, as accurately as possible, the current location of the robot within its environment and, in some cases, to build a map of its surroundings as it explores the area. Navigation is one of the most challenging competencies required of a mobile robot, and success in navigation requires success at its four building blocks:

● Perception: the robot must interpret its sensors to extract meaningful data.

● Localization: the robot must determine its position in the environment.

● Cognition: the robot must decide how to act to achieve its goals.

● Motion Control: the robot must modulate its motor outputs to achieve the desired trajectory.

Localization is an active field of robotics with applications in self-driving cars, unmanned aerial vehicles, autonomous underwater vehicles, planetary rovers, newer domestic robots and even inside the human body. It involves estimating the position of a robot with respect to its surroundings. Knowing the robot's location is an essential precursor to making decisions about future actions, so this capability is critical for any autonomous robot. The concept can be extended to Simultaneous Localization and Mapping (SLAM), which involves building a map of the surroundings while simultaneously localizing the robot within it. Localization algorithms are also used for navigation, mapping, and odometry in virtual reality and augmented reality.

Summer Projects

Implementation of Extended Kalman Filter

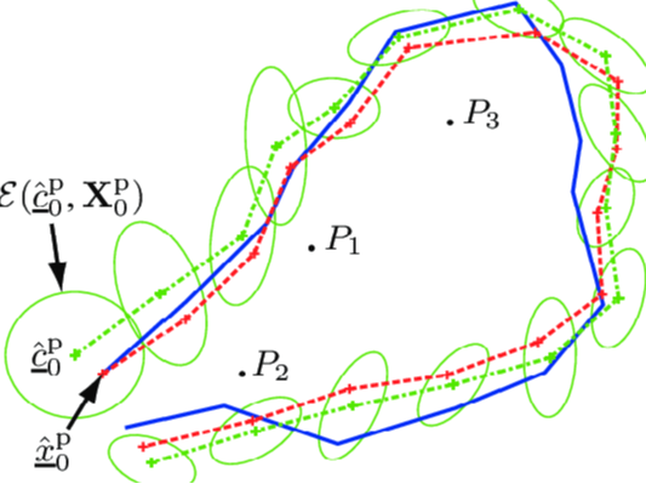

The goal of this task was to implement an Extended Kalman Filter (EKF) on a non-linear model.
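For reference, the standard EKF recursion is summarized below in generic notation (the symbols are chosen here, not taken from the project): $f$ and $h$ are the non-linear motion and measurement models, $F_k = \partial f / \partial x$ and $H_k = \partial h / \partial x$ are their Jacobians evaluated at the current estimate, $u_k$ is the control input, $z_k$ the measurement, and $Q_k$, $R_k$ the process and measurement noise covariances (with $Q_k$ assumed to be already expressed in state space).

$$
\begin{aligned}
\text{Predict:}\quad & \hat{x}_k^- = f(\hat{x}_{k-1}, u_k), \qquad P_k^- = F_k P_{k-1} F_k^\top + Q_k \\
\text{Correct:}\quad & K_k = P_k^- H_k^\top \left(H_k P_k^- H_k^\top + R_k\right)^{-1} \\
& \hat{x}_k = \hat{x}_k^- + K_k\left(z_k - h(\hat{x}_k^-)\right), \qquad P_k = \left(I - K_k H_k\right) P_k^-
\end{aligned}
$$

The only difference from the linear Kalman filter is that the models are evaluated non-linearly while the covariance is propagated through their local linearizations.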

Approach

- The inputs were obtained by calibrating the range and bearing measurements of landmarks.

- The model receives linear and angular velocity odometry readings as input, and state estimation is carried out on the non-linear motion model by implementing an Extended Kalman Filter.

- The project helped with:

1) Calibrating LiDAR sensor data so that it could be used in the filter.

2) Understanding the purpose of an Extended Kalman Filter and the steps involved in implementing one.
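A minimal NumPy sketch of the two EKF steps for a setup like this one. It assumes a planar robot with state [x, y, θ], a unicycle motion model driven by the odometry readings (v, ω), and a range-bearing sensor located at the robot's center observing landmarks at known positions; any sensor offset handled by the calibration step is omitted. The function names and noise values are hypothetical, not the project's code.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


def ekf_predict(x, P, v, omega, dt, Q):
    """Propagate state [x, y, theta] with odometry (v, omega) using a unicycle model."""
    theta = x[2]
    x_pred = x + dt * np.array([v * np.cos(theta), v * np.sin(theta), omega])
    x_pred[2] = wrap_angle(x_pred[2])
    # Jacobian of the motion model with respect to the state, evaluated at x.
    F = np.array([[1.0, 0.0, -dt * v * np.sin(theta)],
                  [0.0, 1.0,  dt * v * np.cos(theta)],
                  [0.0, 0.0, 1.0]])
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred


def ekf_update(x, P, z, landmark, R):
    """Correct with a range-bearing measurement z = [r, phi] of a known landmark."""
    dx, dy = landmark[0] - x[0], landmark[1] - x[1]
    q = dx**2 + dy**2
    r = np.sqrt(q)
    z_hat = np.array([r, wrap_angle(np.arctan2(dy, dx) - x[2])])
    # Jacobian of the measurement model with respect to the state.
    H = np.array([[-dx / r, -dy / r, 0.0],
                  [dy / q, -dx / q, -1.0]])
    innov = z - z_hat
    innov[1] = wrap_angle(innov[1])  # bearing innovation must be wrapped
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x_new = x + K @ innov
    x_new[2] = wrap_angle(x_new[2])
    P_new = (np.eye(3) - K @ H) @ P
    return x_new, P_new
```

In a full run, `ekf_predict` would be called for every odometry reading and `ekf_update` once per landmark observed at that time step. Wrapping the bearing innovation matters: without it, a bearing near ±π can yield an innovation of almost 2π and a large spurious correction.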

Result

Sensor Fusion & State Estimation

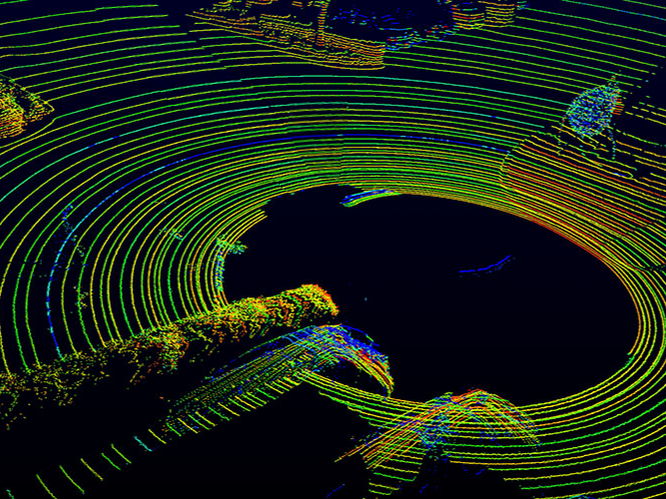

The task involved implementing an error-state extended Kalman filter (ES-EKF) for localization using data from several sensors.

Sensors

1) GNSS/GPS

2) LiDAR

3) IMU Sensor


Approach

- The predicted state is corrected using data from either the GNSS or the LiDAR.

- If neither GNSS nor LiDAR input is available, the prediction alone is used as the state estimate.

- When GNSS or LiDAR input is available, the predicted state is corrected with it.
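The predict-then-correct-when-available logic above can be sketched as follows. This is a deliberately simplified error-state filter, not the project's implementation: it tracks only position and velocity and ignores orientation and IMU biases, so the IMU acceleration is assumed to already be expressed in the navigation frame with gravity removed. Both GNSS and LiDAR are assumed to deliver position fixes in the same frame, differing only in their noise variance. The class name `SimpleESKF`, the 100 Hz IMU rate, and the noise values are hypothetical.

```python
import numpy as np

DT = 0.01  # hypothetical IMU period in seconds (100 Hz)


class SimpleESKF:
    """Position/velocity error-state filter; orientation and biases are ignored."""

    def __init__(self, accel_var=0.1):
        self.p = np.zeros(3)            # nominal position
        self.v = np.zeros(3)            # nominal velocity
        self.P = np.eye(6)              # covariance of the error state [dp, dv]
        self.Q = accel_var * np.eye(3)  # IMU acceleration noise

    def predict(self, accel, dt=DT):
        """Propagate the nominal state with the IMU and the error covariance."""
        self.p = self.p + self.v * dt + 0.5 * accel * dt**2
        self.v = self.v + accel * dt
        F = np.eye(6)                   # error-state transition Jacobian
        F[0:3, 3:6] = dt * np.eye(3)
        L = np.zeros((6, 3))            # noise enters through the velocity error
        L[3:6, :] = np.eye(3)
        self.P = F @ self.P @ F.T + L @ (self.Q * dt**2) @ L.T

    def correct(self, z_pos, meas_var):
        """Correct with a position fix from either GNSS or LiDAR."""
        H = np.zeros((3, 6))
        H[:, 0:3] = np.eye(3)
        R = meas_var * np.eye(3)
        S = H @ self.P @ H.T + R
        K = self.P @ H.T @ np.linalg.inv(S)
        dx = K @ (z_pos - self.p)       # estimated error state
        # Inject the error into the nominal state; the error mean is then reset
        # to zero (for these additive states the reset Jacobian is the identity).
        self.p = self.p + dx[0:3]
        self.v = self.v + dx[3:6]
        self.P = (np.eye(6) - K @ H) @ self.P
```

In a processing loop, `predict` would run for every IMU sample, and `correct` only at time steps where a GNSS or LiDAR fix arrives (passing that sensor's variance); when neither is available, the predicted state is simply kept as the estimate, and its covariance keeps growing.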

Conclusion

The algorithm was successfully tested in cases where either sensor failed or gave incorrect readings. It was also tested for the case where input from a sensor is unavailable for a short interval of time.

GitHub Repository

Depth Map

The goal was to determine depth (the distance of an object from the camera baseline) using a stereo camera pair.

Overview

- Stereo imaging is useful in many applications because, given the geometric relationship between the two images, a depth map of the surroundings can be constructed from images alone.

- To do this, a disparity map is computed and then converted into a depth map using the camera calibration parameters, such as the focal length and the relative translation and rotation between the two cameras.
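A minimal sketch of this pipeline, assuming a pair of already rectified grayscale images stored as NumPy arrays. It uses naive sum-of-absolute-differences (SAD) block matching, which may differ from the matcher used in the project (a library routine such as OpenCV's `StereoBM` would be the practical choice), and converts disparity to depth with Z = f·B/d. The function names, block size, and disparity range are hypothetical.

```python
import numpy as np


def disparity_sad(left, right, max_disp=16, block=5):
    """Naive SAD block matching on rectified grayscale images (illustrative, slow)."""
    h = block // 2
    H, W = left.shape
    disp = np.zeros((H, W), dtype=np.float64)
    for y in range(h, H - h):
        for x in range(h, W - h):
            patch = left[y - h:y + h + 1, x - h:x + h + 1]
            best_d, best_cost = 0, np.inf
            # A point at column x in the left image appears at x - d in the right.
            for d in range(0, min(max_disp, x - h) + 1):
                cand = right[y - h:y + h + 1, x - d - h:x - d + h + 1]
                cost = np.abs(patch - cand).sum()
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y, x] = best_d
    return disp


def depth_from_disparity(disp, focal_px, baseline_m):
    """Z = f * B / d; pixels with zero disparity are treated as infinitely far."""
    depth = np.full(disp.shape, np.inf)
    valid = disp > 0
    depth[valid] = focal_px * baseline_m / disp[valid]
    return depth
```

Pixels near the image border, where a full block does not fit, are left at zero disparity and are therefore mapped to infinite depth.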

Concepts used

Camera planes and their inter-relationship

Disparity map

Depth map
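The link between the disparity map and the depth map follows from similar triangles. Assuming rectified cameras with focal length $f$ (in pixels) and baseline $B$, a point at lateral position $X$ and depth $Z$ in the left camera frame projects to image columns (measured from the principal point):

$$
x_L = \frac{f X}{Z}, \qquad x_R = \frac{f (X - B)}{Z}, \qquad d = x_L - x_R = \frac{f B}{Z} \;\Rightarrow\; Z = \frac{f B}{d}
$$

For example, with hypothetical values $f = 700$ px, $B = 0.12$ m and $d = 4$ px, the depth is $Z = 84 / 4 = 21$ m. Depth is inversely proportional to disparity, so depth resolution degrades for distant objects.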

Tools and Libraries used

Python

Future Developments

- Visual Odometry

- Development of pseudo-LiDAR using cameras