OVERVIEW

The visual world is dynamic, and video scenes routinely contain many moving objects such as vehicles and pedestrians. Many applications do not need to know everything about how movement evolves in a video sequence; they only need to know what has changed in the scene, because the regions of interest in an image are its foreground objects (humans, cars, etc.). After image preprocessing, an object localisation step is required, and it may make use of this technique. Background subtraction is a widely used approach for detecting moving objects in videos from static cameras.

CONCEPT

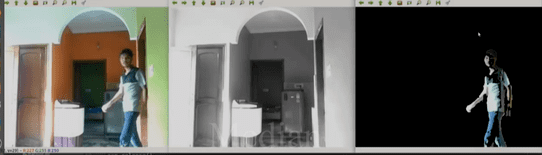

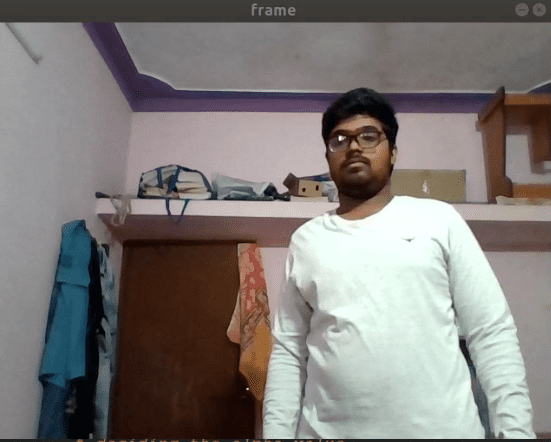

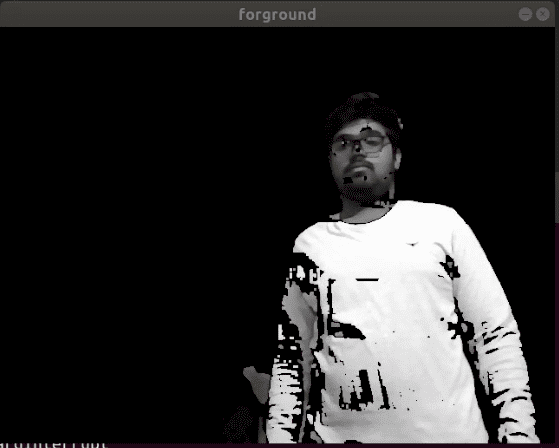

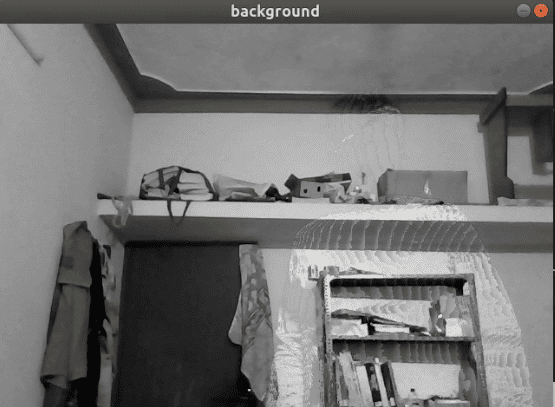

Given a video frame from a static camera observing a scene, the goal is to separate the moving foreground objects from the static background (the part of the frame that does not change position over time). Automated analysis of activities and events in such dynamic video scenes requires detection, classification, and tracking. Here we implement several methods to separate the foreground from the background of a scene.

METHODS IMPLEMENTED

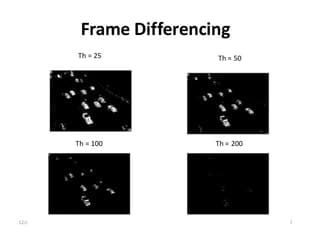

1) Frame Differencing:


This method takes the difference between two consecutive frames to determine whether moving objects are present. Frame differencing is arguably the simplest form of background subtraction. The basic scheme of background subtraction is to subtract a reference image that models the background scene from the current image; in frame differencing, the previous frame serves as that reference.


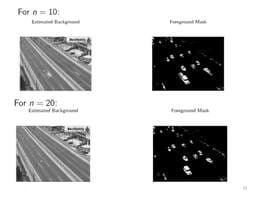
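As a minimal sketch of frame differencing, the function below marks a pixel as foreground when its absolute difference from the previous frame exceeds a threshold. The function name `frame_difference_mask`, the grayscale `uint8` input format, and the default `threshold=25` are assumptions made for illustration; they are not taken from the project's implementation.

```python
import numpy as np


def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Return a boolean foreground mask from two consecutive grayscale frames.

    Both frames are assumed to be 2-D uint8 arrays of the same shape.
    The threshold value is an illustrative assumption.
    """
    # Cast to a signed type first so the subtraction cannot wrap around.
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold


# Tiny example: a static 4x4 scene in which one pixel changes.
prev_frame = np.zeros((4, 4), dtype=np.uint8)
curr_frame = prev_frame.copy()
curr_frame[1, 2] = 200
mask = frame_difference_mask(prev_frame, curr_frame)
```

Because the reference is simply the previous frame, an object that stops moving disappears from the mask after one frame, which is one reason the later methods maintain an explicit background model.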

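2) Mean Filter:

- The background is estimated as the per-pixel mean of the previous n frames.

3) Median Filter:

- The background is estimated as the per-pixel median of the previous n frames.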

4) Median Approximation:

- If a pixel in the current frame has a value larger than the corresponding background pixel, the background pixel is incremented by 1.

- Likewise, if the current pixel is smaller than the background pixel, the background pixel is decremented by 1.

- The background estimate eventually converges to a value that approximates the median of the observed values, with roughly half of them larger and half smaller.

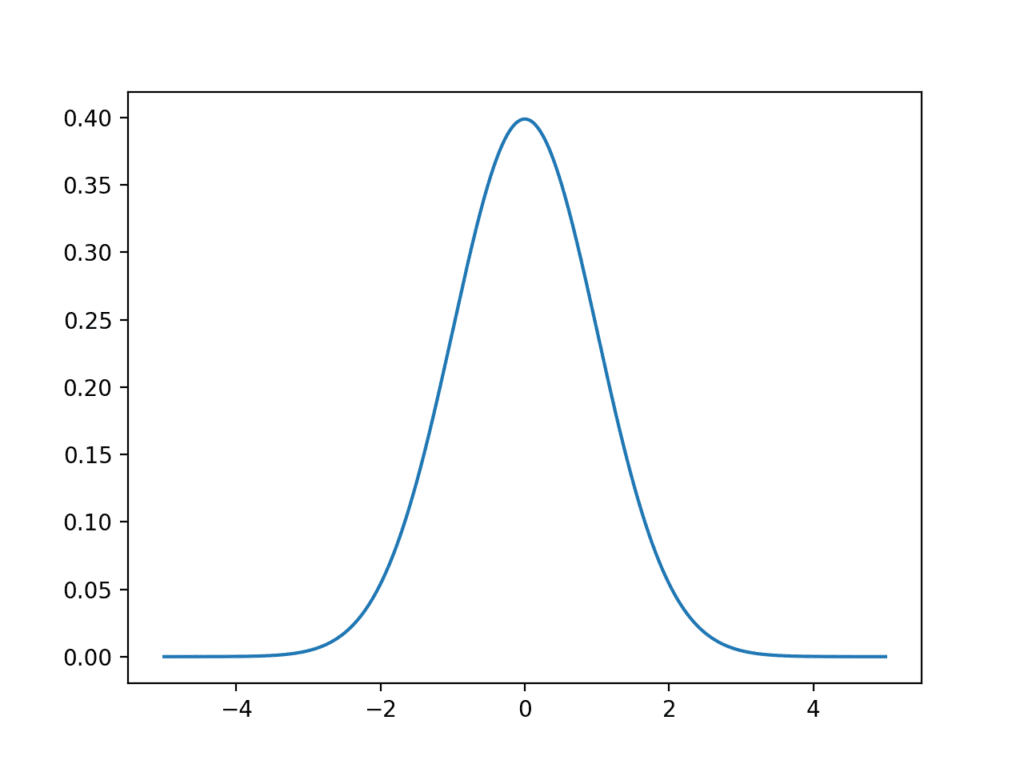
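The update rules for median approximation can be sketched in a few lines of NumPy. The function name `update_approx_median` and the grayscale `uint8` frame format are assumptions for illustration, not the project's actual code.

```python
import numpy as np


def update_approx_median(background, frame):
    """Move each background pixel one grey level toward the current frame.

    Both arguments are assumed to be uint8 arrays of the same shape.
    """
    bg = background.astype(np.int16)
    # np.sign gives +1 where the frame is brighter, -1 where it is darker,
    # and 0 where the two already agree.
    bg += np.sign(frame.astype(np.int16) - bg)
    return np.clip(bg, 0, 255).astype(np.uint8)


background = np.array([[10, 20, 30]], dtype=np.uint8)
frame = np.array([[12, 20, 25]], dtype=np.uint8)

one_step = update_approx_median(background, frame)

# Repeating the update with the same frame walks the estimate toward it.
converged = background
for _ in range(5):
    converged = update_approx_median(converged, frame)
```

Only the current background estimate is stored, so the memory cost is one frame, in contrast to a true median filter that has to buffer the last n frames.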

5) Running Gaussian Average:

- For every pixel, fit a single Gaussian distribution (µ, σ) to its values over the most recent n frames; this gives the background PDF.

- To accommodate changes in the background over time (e.g. due to illumination changes or non-static background objects), every pixel's mean and variance are updated at every frame.
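One common way to realise the per-frame update is an exponential running average of the mean and variance, with a pixel flagged as foreground when it lies more than k standard deviations from the mean. The sketch below assumes that form; the function name `running_gaussian_step`, the learning rate `rho`, and the factor `k` are illustrative choices rather than values from this project.

```python
import numpy as np


def running_gaussian_step(frame, mean, var, rho=0.05, k=2.5):
    """Classify one frame and update the per-pixel Gaussian model.

    frame, mean and var are arrays of the same shape; rho and k are
    assumed, illustrative parameters.
    Returns (foreground_mask, new_mean, new_var).
    """
    frame = frame.astype(np.float64)
    # A pixel is foreground if it deviates from the mean by more than k sigma.
    foreground = np.abs(frame - mean) > k * np.sqrt(var)
    # Blend the new observation into the running mean and variance.
    new_mean = rho * frame + (1.0 - rho) * mean
    new_var = rho * (frame - new_mean) ** 2 + (1.0 - rho) * var
    return foreground, new_mean, new_var


mean = np.full((3, 3), 100.0)
var = np.full((3, 3), 25.0)          # sigma = 5 grey levels
frame = np.full((3, 3), 100, dtype=np.uint8)
frame[0, 0] = 150                    # one pixel changes sharply

fg, mean, var = running_gaussian_step(frame, mean, var)
```

This sketch updates every pixel, including those just classified as foreground; a variant that skips the update for foreground pixels keeps moving objects from contaminating the background model, at the cost of slower recovery from genuine scene changes.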

6) Gaussian Mixture Model:

- A background pixel may belong to more than one pattern class, each with its own PDF.

- The history of each pixel is modelled by a weighted combination of K adaptive Gaussians to cope with multimodal background distributions.

- The parameters of the Gaussians (weights, means, and variances) are updated as each new frame arrives.
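To make the mixture idea concrete, here is a simplified sketch for a single grayscale pixel, loosely following the scheme described by Stauffer and Grimson. It is not the project's implementation: the class name `PixelGMM`, all default parameter values, the variance floor, and the use of one learning rate `alpha` for weights, means, and variances are simplifying assumptions.

```python
import numpy as np


class PixelGMM:
    """Simplified mixture of K Gaussians for one grayscale pixel (a sketch)."""

    def __init__(self, k=3, alpha=0.05, bg_fraction=0.7,
                 init_var=225.0, min_var=4.0, match_sigmas=2.5):
        # All defaults are illustrative assumptions.
        self.alpha = alpha
        self.bg_fraction = bg_fraction
        self.init_var = init_var
        self.min_var = min_var
        self.match_sigmas = match_sigmas
        self.w = np.zeros(k)               # component weights
        self.mu = np.zeros(k)              # component means
        self.var = np.full(k, init_var)    # component variances

    def update(self, x):
        """Update the mixture with intensity x; return True if x is foreground."""
        # Rank components by weight / sigma: heavy, narrow ones come first.
        order = np.argsort(-self.w / np.sqrt(self.var))
        self.w, self.mu, self.var = self.w[order], self.mu[order], self.var[order]
        # The leading components whose cumulative weight reaches bg_fraction
        # are treated as background.
        n_bg = int(np.searchsorted(np.cumsum(self.w), self.bg_fraction)) + 1
        sigma = np.sqrt(self.var)
        matches = np.flatnonzero(np.abs(x - self.mu) < self.match_sigmas * sigma)
        if matches.size:
            m = matches[0]                 # best-ranked matching component
            foreground = m >= n_bg
            self.w *= 1.0 - self.alpha     # decay every weight ...
            self.w[m] += self.alpha        # ... and reinforce the match
            self.mu[m] += self.alpha * (x - self.mu[m])
            new_var = self.var[m] + self.alpha * ((x - self.mu[m]) ** 2 - self.var[m])
            self.var[m] = max(new_var, self.min_var)
        else:
            # No match: replace the lowest-ranked component with a new,
            # wide, low-weight Gaussian centred on x.
            foreground = True
            self.w[-1], self.mu[-1], self.var[-1] = self.alpha, x, self.init_var
        self.w /= self.w.sum()
        return bool(foreground)


# A pixel whose background alternates between two intensities,
# e.g. a flickering light or swaying foliage.
model = PixelGMM()
for i in range(200):
    model.update(50.0 if i % 2 == 0 else 200.0)
```

After this training loop both intensities are held as background modes, so further observations of 50 or 200 are classified as background while an intermediate value such as 120 is flagged as foreground. A single-Gaussian model would instead average the two modes and absorb such a value. The first few frames are unreliable because the mixture starts empty.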


TEAM MEMBERS:


MENTORS:


REFERENCE:

1) Chris Stauffer and W. E. L. Grimson, "Adaptive background mixture models for real-time tracking".
